Iperf is a network performance measurement and optimization tool.

The iperf application is a cross-platform program that provides standard network performance metrics. Iperf comprises a client and a server that generate a stream of data to assess the throughput between two endpoints in one or both directions.

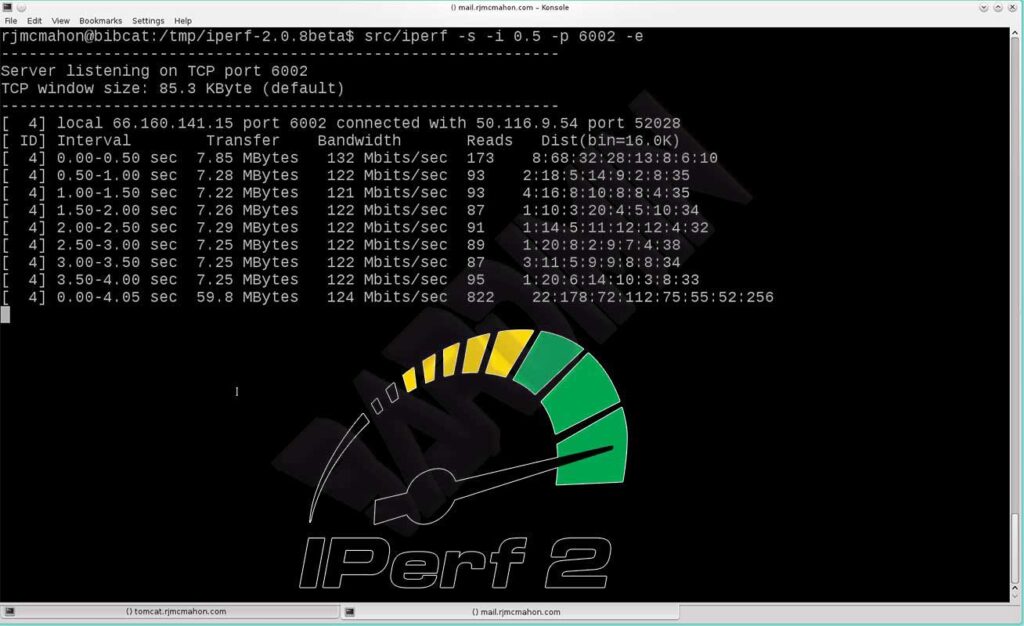

A typical iperf output includes a time-stamped report of the amount of data transferred and the measured throughput.
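To make the shape of such a report concrete, the sketch below parses one interval line into its time range, amount of data, and throughput. The sample line is hand-written for illustration, not captured from a real run, and the helper name `parse_report_line` is hypothetical. It assumes the common layout of stream id, interval, transfer, and bandwidth, with byte units treated as powers of 1024 and bit units as powers of 1000; verify both against the iperf version you run.

```python
import re

# Matches a line such as:
#   [  3]  0.0-10.0 sec  1.10 GBytes   943 Mbits/sec
LINE_RE = re.compile(
    r"\[\s*(?P<id>\d+)\]\s+"
    r"(?P<start>\d+(?:\.\d+)?)\s*-\s*(?P<end>\d+(?:\.\d+)?)\s+sec\s+"
    r"(?P<amount>\d+(?:\.\d+)?)\s+(?P<amount_unit>[KMGT]?)Bytes\s+"
    r"(?P<rate>\d+(?:\.\d+)?)\s+(?P<rate_unit>[KMGT]?)bits/sec"
)

# Assumption: byte prefixes are binary, bit prefixes are decimal.
BYTE_SCALE = {"": 1, "K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}
BIT_SCALE = {"": 1, "K": 1000, "M": 1000**2, "G": 1000**3, "T": 1000**4}


def parse_report_line(line):
    """Return a dict for one interval line, or None if it does not match."""
    m = LINE_RE.search(line)
    if m is None:
        return None
    return {
        "stream_id": int(m.group("id")),
        "start_s": float(m.group("start")),
        "end_s": float(m.group("end")),
        "bytes": round(float(m.group("amount")) * BYTE_SCALE[m.group("amount_unit")]),
        "bits_per_second": float(m.group("rate")) * BIT_SCALE[m.group("rate_unit")],
    }


if __name__ == "__main__":
    sample = "[  3]  0.0-10.0 sec  1.10 GBytes   943 Mbits/sec"  # illustrative only
    print(parse_report_line(sample))
```

Lines that do not match, such as headers and connection banners, return `None`, so the function can be applied to every line of captured output.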

Iperf2

Iperf2 is a network throughput and responsiveness measurement tool that supports TCP and UDP. One of its goals is to keep the iperf codebase functioning on various platforms and operating systems.

It has a multi-threaded design that scales with the number of CPUs or cores in a system, and it can collect and report network performance using both high- and low-impact strategies.

Features of Iperf2

- Supports smaller report intervals (100 us or greater; configure with --enable-fastsampling for high-precision interval time output)

- Supports SO_RCVTIMEO so that the server keeps reporting even when no packets arrive

- Supports SO_SNDTIMEO when sending so that socket writes won’t block beyond -t or -i

- Supports SO_TIMESTAMP for kernel-level packet timestamps

- Supports end-to-end latency reported as mean/min/max/stdev (UDP, -e required), assuming client and server clocks are synchronized, e.g. with Precision Time Protocol to an OCXO-based reference such as a Spectracom oscillator

- Supports rate-limited TCP flows (via -b) using a simplified token bucket

- Supports packets per second (UDP) using pps as the unit (e.g. -b 1000pps)

- Show PPS in client and server (UDP) reports (-e required)

- Supports real-time schedulers as a command-line option (--realtime or -z, assuming proper user rights)

- Display target loop time in the initial client header (UDP)

- Adds support for IPv6 link-local addresses (e.g. iperf -c fe80::d03a:d127:75d2:4112%eno1)

- UDP IPv6 payload defaults to 1450 bytes so that each payload fits in one Ethernet frame

- Supports isochronous traffic (via --isochronous) and frame bursts, as well as variable bit rate (vbr) traffic and frame IDs

- SSM multicast support for IPv4 and IPv6 using -H or --ssm-host, e.g. iperf -s -B ff1e::1 -u -V -H fc00::4

- Latency histograms for packets and frames (e.g. --udp-histogram=10u,200000, 0.03, 99.97)

- Support for timed delivery starts per --txstart-time <unix.epoch time>

- Support for clients that increment the destination IP for each -P stream via --incr-dstip

- Support for varying the offered load using a log-normal distribution around a mean and standard deviation (per -b <mean>,<stdev>)

- Honor -T (ttl) for unicast and multicast

- UDP uses a 64-bit sequence number (while remaining interoperable with 2.0.5, which uses a 32-bit sequence number)
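The list above mentions that rate-limited flows rely on a simplified token bucket. The sketch below shows the general technique in Python. It is not iperf2's implementation (iperf2 is written in C, and its internals may differ), and the names `TokenBucket` and `simulate` are hypothetical. Time is passed in explicitly as integer milliseconds so that the behavior is deterministic.

```python
class TokenBucket:
    """Generic token bucket: tokens are bytes, refilled at a fixed rate."""

    def __init__(self, rate_bps, burst_bytes):
        self.rate_bytes_per_s = rate_bps / 8.0  # target rate in bytes/second
        self.capacity = float(burst_bytes)      # maximum stored tokens
        self.tokens = float(burst_bytes)        # start with a full bucket
        self.last_ms = 0                        # time of the last refill

    def _refill(self, now_ms):
        elapsed_ms = max(0, now_ms - self.last_ms)
        added = elapsed_ms * self.rate_bytes_per_s / 1000.0
        self.tokens = min(self.capacity, self.tokens + added)
        self.last_ms = now_ms

    def try_send(self, nbytes, now_ms):
        """Consume tokens and return True if nbytes may be sent now."""
        self._refill(now_ms)
        if nbytes <= self.tokens:
            self.tokens -= nbytes
            return True
        return False


def simulate(rate_bps, burst_bytes, write_bytes, step_ms, steps):
    """Attempt one write every step_ms; return the times (ms) of sends."""
    bucket = TokenBucket(rate_bps, burst_bytes)
    sent_at = []
    for i in range(steps):
        now_ms = i * step_ms
        if bucket.try_send(write_bytes, now_ms):
            sent_at.append(now_ms)
    return sent_at


if __name__ == "__main__":
    # 8000 bit/s target, 1000-byte burst, 500-byte writes tried every 100 ms.
    print(simulate(8000, 1000, 500, 100, 20))
```

The initial burst lets the first writes through immediately, after which sends are spaced so that the long-run rate converges on the configured target.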

Iperf2 supported operating systems

- Linux, Windows 10, Windows 7, Windows XP, macOS, Android, and some set-top box operating systems.

Iperf3

The Iperf3 application is a rewrite of iperf from scratch to create a smaller and simpler codebase.

iPerf3 is a tool for actively measuring the maximum achievable bandwidth on IP networks. It lets you tune various parameters related to timing, buffers, and protocols (TCP, UDP, SCTP with IPv4 and IPv6). For each test, it reports the bandwidth, loss, and other metrics.
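As a rough illustration of how these tunables map onto a command line, the sketch below assembles an argument list for an iperf3 client. The function `build_iperf3_client_args` and its parameter names are hypothetical. The flags used are standard iperf3 options; `-u` (UDP) and `-w` (window or socket buffer size) do not appear elsewhere in this article. The list is only built here, not executed.

```python
def build_iperf3_client_args(host, duration_s=10, interval_s=1, parallel=1,
                             udp=False, bandwidth=None, window=None,
                             json_output=False):
    """Return an argv list for an iperf3 client run (nothing is executed)."""
    args = ["iperf3", "-c", host, "-t", str(duration_s), "-i", str(interval_s)]
    if parallel > 1:
        args += ["-P", str(parallel)]   # simultaneous connections
    if udp:
        args.append("-u")               # UDP instead of TCP
    if bandwidth:
        args += ["-b", bandwidth]       # target bandwidth, e.g. "10M"
    if window:
        args += ["-w", window]          # window / socket buffer size
    if json_output:
        args.append("-J")               # JSON output
    return args


if __name__ == "__main__":
    # "testhost" is a placeholder server name.
    print(" ".join(build_iperf3_client_args("testhost", udp=True, bandwidth="10M")))
```

The resulting list can be passed to `subprocess.run` on a machine where iperf3 is installed and a server is listening on the target host.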

Features of Iperf3

- TCP and SCTP (measure bandwidth, report MSS/MTU size and observed read sizes, support for TCP window size via socket buffers).

- UDP (client can create UDP streams of a specified bandwidth, measure packet loss, measure delay jitter, multicast capable)

- Both the client and the server can have several simultaneous connections (-P option).

- The server handles multiple connections, rather than stopping after a single test.

- Can run for a specified time (-t option), rather than a set amount of data to transfer (-n or -k option).

- Print periodic, intermediate bandwidth, jitter, and loss reports at specified intervals (-i option).

- Run the server as a daemon (-D option)

- Use representative flows to test how link layer compression affects achievable bandwidth (-F option).

- A server accepts a single client at a time (iPerf3) or multiple clients simultaneously (iPerf2)

- Ignore TCP slow-start (-O option).

- Set the target bandwidth for UDP and (new) TCP (option -b).

- Set IPv6 flow label (-L option)

- Set the congestion control algorithm (-C option)

- Use SCTP instead of TCP (--sctp option)

- The output is in JSON format (-J option).

- Disk read test (server: iperf3 -s / client: iperf3 -c testhost -i1 -F filename)

- Disk write test (server: iperf3 -s -F filename / client: iperf3 -c testhost -i1)
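The JSON output mentioned in the list above (-J option) is convenient for scripting. The sketch below summarizes a report using Python's standard `json` module. The embedded report is a trimmed, hand-written sample with made-up numbers. The field names (`start`, `intervals`, `end`, `sum_sent`, `sum_received`) reflect the layout commonly seen in iperf3 TCP output and should be treated as an assumption to verify against your own runs. The `summarize` helper is hypothetical.

```python
import json

# Trimmed, hand-written sample; the values are illustrative only.
SAMPLE_REPORT = """
{
  "start": {"test_start": {"protocol": "TCP", "duration": 10}},
  "intervals": [
    {"sum": {"start": 0, "end": 1.0, "bytes": 117440512,
             "bits_per_second": 939524096.0}}
  ],
  "end": {
    "sum_sent": {"bytes": 1178599424, "bits_per_second": 942879539.2},
    "sum_received": {"bytes": 1176502272, "bits_per_second": 941201817.6}
  }
}
"""


def to_mbps(bits_per_second):
    """Convert bits/second to megabits/second, rounded to one decimal."""
    return round(bits_per_second / 1e6, 1)


def summarize(report_text):
    """Extract overall and per-interval throughput from a JSON report."""
    report = json.loads(report_text)
    end = report["end"]
    return {
        "protocol": report["start"]["test_start"]["protocol"],
        "sent_mbps": to_mbps(end["sum_sent"]["bits_per_second"]),
        "received_mbps": to_mbps(end["sum_received"]["bits_per_second"]),
        "interval_mbps": [
            to_mbps(iv["sum"]["bits_per_second"]) for iv in report["intervals"]
        ],
    }


if __name__ == "__main__":
    print(summarize(SAMPLE_REPORT))
```

In practice the text would come from the client's standard output, for example from `iperf3 -c testhost -J`, rather than from an embedded string.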